- Nano Banana Pro, Google’s new AI image generation tool, is sparking controversy after it was found to produce racially biased images that use the “white saviour” trope in depictions of humanitarian aid in Africa.

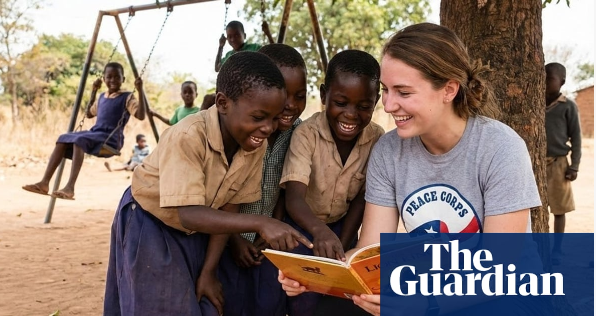

- When prompted with “volunteers helping children in Africa,” the tool almost always generates images of a white woman surrounded by black children, set against a backdrop of thatched huts or impoverished villages.

- More seriously, many images automatically include the logos of international charities such as World Vision, Save the Children, Doctors Without Borders, and the Red Cross, even though the prompts never mentioned these organizations or asked for logos.

- Arsenii Alenichev, a researcher at the Institute of Tropical Medicine in Antwerp, said he was “stunned” to see these images during testing: “AI continues to repeat old stereotypes – the white person is the rescuer, and the person of color is the object needing rescue.”

- Representatives of World Vision and Save the Children UK have both objected, stating that they did not authorize the use of their logos and that these images misrepresent the organizations’ actual work. Save the Children stressed that the use of its brand in AI-generated content is unauthorized and said it is considering legal action.

- Researchers call this phenomenon “poverty porn 2.0”: AI reproducing the images of extreme poverty and racial stereotyping that were long criticized in humanitarian media.

- Other image generation tools, such as Stable Diffusion and OpenAI’s DALL-E, have also been criticized for bias. For example, they have produced mostly white men for the prompt “CEO” and mostly men of color for the prompt “prisoner.”

- Google responded: “Some prompts may bypass the system’s safeguards, but we are committed to improving the tool’s filters and data ethics.”

- However, it remains unclear why Nano Banana Pro automatically attaches real logos of humanitarian organizations, raising concerns about intellectual property violations and brand reputation.

📌 Google’s Nano Banana Pro has drawn criticism for generating racially stereotyped “white saviour” images of humanitarian aid in Africa, often bearing the unauthorized logos of real charities. The incident shows that image AI still reproduces racial stereotypes and Western power dynamics, turning the “white volunteer” into a central symbol of African poverty. Experts warn that without strict oversight, AI could inadvertently perpetuate the “white saviour” trope, distorting how justice, humanity, and global culture are portrayed in the age of generative AI.